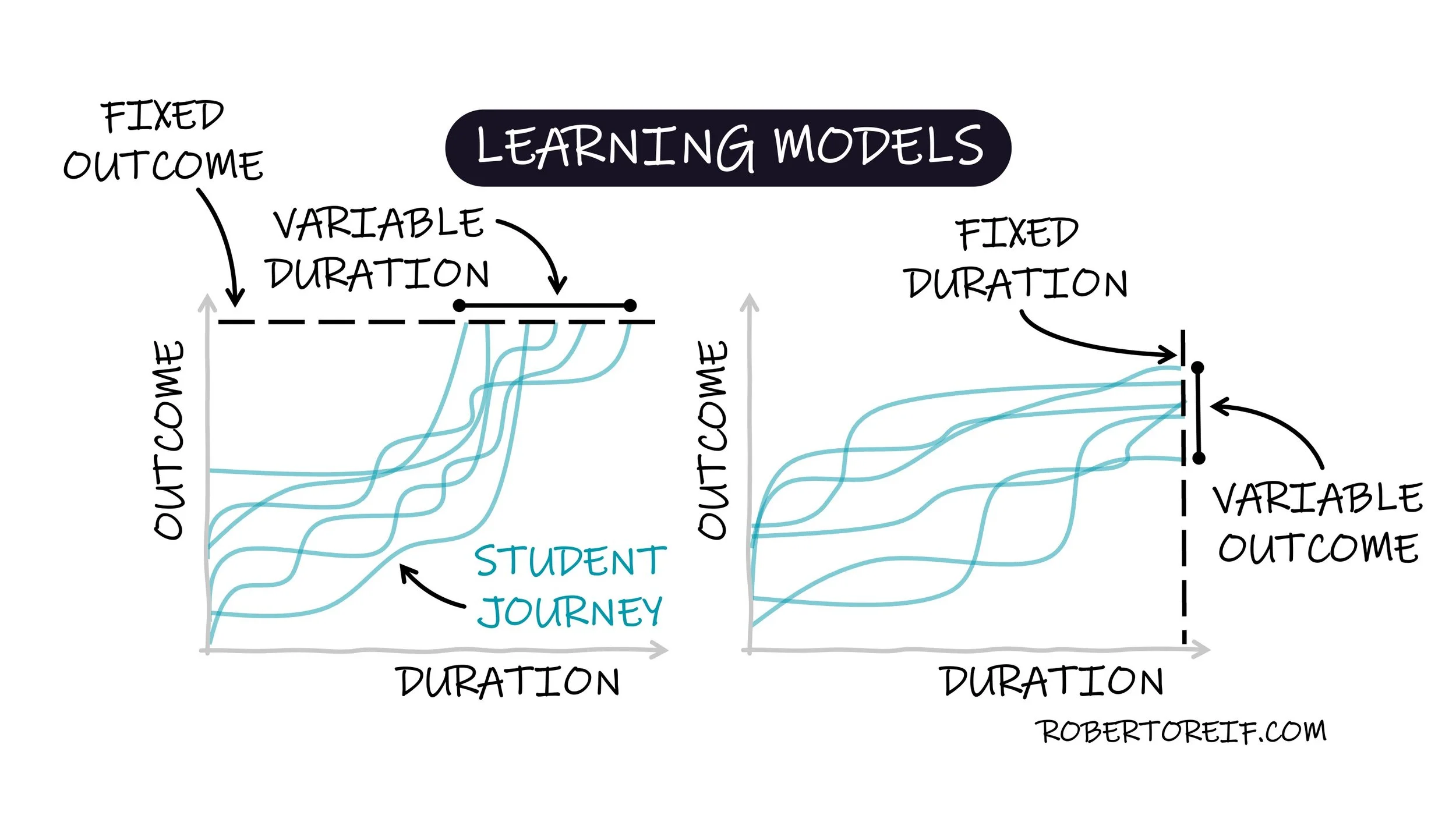

When I teach courses, I often encounter a diverse group of students who differ in prior knowledge, experience, and learning style. As they progress, each student builds skills and understanding at their own pace. This diversity brings two common models in education into focus: Fixed Duration and Fixed Outcome.

In a Fixed Duration model, like a traditional college semester, the course has a set start and end date for all students. Everyone moves through the material on the same timeline, regardless of how quickly or slowly they master the content. Success is measured by whether a student crosses a minimum performance threshold. As a result, students finish with different levels of mastery, depending on how well they kept pace and grasped the material in the time allotted.

By contrast, a Fixed Outcome model aims to ensure that all students reach the same level of competency or skill, regardless of how long it takes them to get there. This is often seen in self-paced online courses. In this format, students progress at a speed that suits them, revisiting challenging concepts as needed until they have fully understood them. Consistent learning outcomes become the primary goal, and time becomes the flexible factor.

Both approaches have their merits. Fixed Duration models provide structure and predictability, while Fixed Outcome models prioritize mastery and can be more personalized and accommodating to individual learning needs.

Which type of program do you find more effective for your learning style?